How to NOT migrate Nextcloud

When I started on this journey, I thought I was going to write a post about how to move Nextcloud from one server to another. I had a few ideas in my head about how I could go about it. In the end, NONE of them worked very well (for various reasons). So, this is more about how to NOT go about moving your Nextcloud. The silver lining is that I learned a lot and discovered some new tools in the process.

The reason

For the last year or so, I was hosting my Nextcloud instance on a Kimsufi server. I did this because their prices were pretty good for a solid 2 TB of storage (roughly $13/month) plus a basic server to run it on. This type of high-storage hosted server is pretty rare, even now. Kimsufi is a reseller of OVH servers, as is their sister company SoYouStart (SYS). I actually tried SoYouStart first, because you got more specs for the price, but the SYS servers run on ARM boards, which don't support every Linux distribution or piece of software. The Kimsufi servers run Atom processors, so you can still use standard Linux distributions.

All was going well until renewal time. The first thing you will notice is that Kimsufi always seems to be out of stock, so it's not ideal if you want to just set up a server and go. It's very likely they will not have what you want when you want it.

I thought I was lucky because they DID have what I wanted - but I noticed that their price was now even lower than what I bought it for: $9/month for a 2 TB server instead of $13. I was ecstatic, because I assumed they would let me renew for this lower price. WRONG. They basically told me they would not do anything of the sort, and I would have to migrate my data myself. Being the spiteful person I am, I decided that if I was going to be moving my data it was going to be AWAY from Kimsufi.

Self-Hosting cost issues

As I mentioned above, even in 2020 it's pretty hard to find high-storage servers at a low price. It seems the main providers just don't think this is a worthwhile market. Their pre-built models either have RAM measured in tens of gigabytes instead of just 1 or 2, or they have 8+ cores instead of the 1-2 we need.

The providers that do let you add your own attached disk storage charge insane prices (per GB, not per TB). A 2 TB disk will easily cost you more than $60 a month.

Considering this, I decided to split the servers. I can host all my critical services for about $6/month with Hetzner (Vultr is another good option). This gives me 2 vCPUs, 4 GB of RAM, and 40 GB of disk space. That is plenty for hosting multiple applications; it's just a bit light on disk space.

Even Hetzner and Vultr aren't very competitive when it comes to adding storage. At the time of writing, Hetzner would charge 97.48 Euros per month for 2 TB of storage.

So now that I have my main server, I just need a way to attach some extra storage. The other advantage of doing it this way is that I can leave my main server where it is, and re-evaluate storage options over time with minimal impact to my server.

The Non-Options

Each year, there are more and more cloud storage options. In my opinion, any service that specializes in just that (Dropbox, Box, Google Drive, etc.) is just asking for trouble. My tin foil hat sense goes crazy when I think about storing files with any of these guys. First, who are they sharing it with? Second, how long until someone comes up with a legal reason why they need to let anyone who asks scan through my files? Who knows, maybe somewhere in my archives I have a copyrighted image someone wants to sue me for. No thanks. You can keep your storage-optimized services, because they are also optimized for letting other people find my things, and you have proven you can't be trusted.

The Options

It's a slim hope, but I feel better about hosting on raw storage drives, or a raw storage service instead of the services mentioned above. Especially if I'm using something like Nextcloud where I can enable file-level encryption.

There are a few main types we could look into:

- S3-based Storage

- Self-Hosted External Storage (Ceph, WebDAV, CIFS, etc.)

- External Storage (Ceph, WebDAV, CIFS, etc.)

S3 Storage

On the surface, S3 storage seems like the best way to go, especially if you're using Nextcloud, because it supports S3 as an External Storage device. If you skip past services like Amazon, you can find some really good ones from Wasabi and Backblaze. Both offer S3 storage for around $5 per TB per month. This is way cheaper than most others, and it has the benefit of scaling easily.

The hidden costs

There are three main "gotchas" with S3 Storage:

- Bandwidth fees

  - Depending on the provider, you may be paying fees for ingress and egress (upload and download) of data.

  - This means you won't just be paying the monthly storage rate, but also for uploading and downloading your data.

  - The more you access your data, the more it will cost.

- Retention fees

  - Some S3 storage providers have a retention period: the minimum length of time you will be billed for each file, even if you delete it sooner.

  - This period is usually 3 months. This means you pay for at least 3 months of storage for everything you upload, even if you uploaded something and quickly deleted it.

  - For example, if you upload 100 GB, delete those 100 GB, then upload another 100 GB, you will be charged for 200 GB of storage for at least 3 months.

- Minimum usage

  - Some of these services have a minimum amount of storage you will be charged for.

  - For example, Wasabi has a minimum of 1 TB. So even if you're only storing 100 GB, you will be charged for the full 1 TB.

As you can see, these are some pretty serious considerations if we want to have a place to easily store our files. All it takes is one mistake in uploading your data and you will be paying for that mistake for 3 months.
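To make the retention and minimum-usage rules concrete, here is a rough Python cost model. It is a sketch under stated assumptions, not any provider's actual billing logic: the $5/TB price, the 90-day minimum, and the 1 TB minimum are placeholders taken loosely from the figures above, and `StoredObject`, `billed_gb_days`, and `monthly_charge` are hypothetical names.

```python
from dataclasses import dataclass
from typing import List, Optional

# Assumed figures, loosely based on the text; check your provider's current terms.
PRICE_PER_TB_MONTH = 5.0   # "around $5 per TB per month"
MIN_RETENTION_DAYS = 90    # the ~3 month minimum retention period
MIN_BILLED_TB = 1.0        # a 1 TB minimum charge, as with Wasabi above


@dataclass
class StoredObject:
    size_gb: float
    uploaded_day: int
    deleted_day: Optional[int] = None  # None: still stored at the end of the period


def billed_gb_days(objects: List[StoredObject], period_end_day: int) -> float:
    """GB-days charged when every object is billed for at least MIN_RETENTION_DAYS."""
    total = 0.0
    for obj in objects:
        end = obj.deleted_day if obj.deleted_day is not None else period_end_day
        # Deleting or overwriting an object early does not stop the meter.
        total += obj.size_gb * max(end - obj.uploaded_day, MIN_RETENTION_DAYS)
    return total


def monthly_charge(stored_tb: float) -> float:
    """Monthly storage charge when anything below MIN_BILLED_TB is rounded up to it."""
    return max(stored_tb, MIN_BILLED_TB) * PRICE_PER_TB_MONTH


if __name__ == "__main__":
    # 900 GB uploaded on day 0, then overwritten by a full re-upload on day 1.
    resync = [StoredObject(900, 0, deleted_day=1), StoredObject(900, 1)]
    average_gb = billed_gb_days(resync, period_end_day=91) / MIN_RETENTION_DAYS
    print(f"Average billed over the first 90 days: {average_gb:.0f} GB")
    print(f"Storing 100 GB costs ${monthly_charge(0.1):.2f}/month")
```

In this model, re-uploading 900 GB in full on day 1 leaves you paying for about 1,800 GB on average over the first 90 days, because the overwritten copy is still billed for its whole retention period.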

Self-Hosted External Storage

There are a number of different protocols you can use to mount storage in Linux, and they each have their pros and cons. My main warning is to be careful about cache usage. For example, mounting WebDAV with DAVfs caches every file that has been accessed. This means that when you try to upload 1 TB of data, it will try to cache it on your server's local disk on its way to the storage back-end. If you're using a low-storage server (like the 40 GB one mentioned above), this is going to kill your server.
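Before starting a large upload through a caching mount, it can help to check whether the local disk could even hold what the caching layers are likely to stage. The sketch below uses Python's `shutil.disk_usage`; `can_stage_upload` is a hypothetical helper, and both the `copies` parameter (2 when two layers, such as Nextcloud and DAVfs, each cache the same file) and the 2 GiB headroom are assumptions for illustration.

```python
import shutil

GB = 1000**3


def can_stage_upload(cache_dir: str, upload_bytes: int, copies: int = 1,
                     headroom_bytes: int = 2 * 1024**3) -> bool:
    """Return True if cache_dir's filesystem has room for `copies` staged copies.

    copies=2 models two layers caching the same file (e.g. Nextcloud plus DAVfs).
    headroom_bytes is an arbitrary safety margin so the OS itself keeps some space.
    """
    free = shutil.disk_usage(cache_dir).free
    return free >= upload_bytes * copies + headroom_bytes


if __name__ == "__main__":
    # Would a 1 TB upload, cached twice, fit on this machine's root disk?
    print(can_stage_upload("/", 1000 * GB, copies=2))
```

On a 40 GB server like the one described above, this check fails immediately, which is far better than discovering it halfway through the upload.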

Caveat Emptor

Self-hosting this type of storage requires a high-storage server. As I mentioned above, this is still hard to come by. So of course I could consider dropping my spite and getting another Kimsufi server, but there's a catch: these services just give you a raw hard disk, with no fault tolerance or backup built in. This is fine if you plan to keep another copy of your data elsewhere, but since we are going "low cost", we probably don't want to spend money on that as well. This is the main reason I didn't just swallow my pride and go back to Kimsufi.

External Storage

Providers offering External Storage use the same technologies listed above: Ceph, WebDAV, CIFS, etc. The main difference is that these providers handle the setup, uptime, and even redundancy for you. These are the key advantages over hosting your own external storage.

As luck would have it, Hetzner also offers this kind of storage as part of their "Storage Box" service. There are a number of competitors, but since I'm already hosting my main server with them and they offer 2 TB for 11.78 Euros/month, it is really a no-brainer. This is roughly the same cost as 2 TB of S3 from Wasabi or Backblaze, with none of the hidden costs.

Created vs Modified Time

First, you need to give up the dream that your files will keep their "Created" time. It seems that all the developers in the world have decided that the "Created" time is not when the file was first created, but rather when it was created on the current device.

Example

You take a picture in 2015 and save it to a disk. The created time and modified time are both 2015. You then move the file to another disk in 2020. The created time becomes 2020, and the modified time stays 2015. In my opinion, this is wrong, but apparently a lot of other people don't think so. For pictures, we have the benefit that there may be some extra EXIF data to tell us when the picture was taken. For other files, you are out of luck.
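Only the modified time is something a copy tool can reliably carry over; on Linux, `st_ctime` is the inode change time rather than a creation time. This Python sketch shows the difference between a copy that keeps the modified time and one that does not: `shutil.copy` copies the data and permission bits, while `shutil.copy2` also copies timestamps. The file name and the 2015 timestamp are made up for the demonstration.

```python
import os
import shutil
import tempfile
import time
from typing import Dict

# 2015-06-01 00:00:00 UTC, standing in for "the picture was taken in 2015".
TAKEN_2015 = 1433116800


def copied_mtimes(src_dir: str, dst_dir: str) -> Dict[str, float]:
    """Copy one file two ways and report each copy's modified time."""
    src = os.path.join(src_dir, "photo.jpg")
    with open(src, "wb") as f:
        f.write(b"not really a jpeg")
    # Backdate the source file's access and modified times to 2015.
    os.utime(src, (TAKEN_2015, TAKEN_2015))

    plain = os.path.join(dst_dir, "plain.jpg")
    kept = os.path.join(dst_dir, "kept.jpg")
    shutil.copy(src, plain)   # data + permission bits; mtime becomes "now"
    shutil.copy2(src, kept)   # also copies atime/mtime via shutil.copystat
    return {
        "original": os.stat(src).st_mtime,
        "shutil.copy": os.stat(plain).st_mtime,
        "shutil.copy2": os.stat(kept).st_mtime,
    }


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as a, tempfile.TemporaryDirectory() as b:
        for how, mtime in copied_mtimes(a, b).items():
            print(f"{how:13} {time.strftime('%Y-%m-%d', time.gmtime(mtime))}")
```

The `shutil.copy` line shows today's date, while `shutil.copy2` keeps 2015-06-01. Every tool in a migration chain has to behave like the second one, or the date is gone for good.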

What I tried

This is a rough list of what I tried, and the issues I encountered.

- Nextcloud External Storage (S3 Storage from Wasabi)

- Nextcloud External Storage (WebDAV to Storage Box)

- Mounted Storage (DAVfs to Storage Box)

- Mounted Storage (CIFS to Storage Box)

Preface: Nextcloud Sync Issues

Before we get started discussing any of the specific back-ends, I learned a very hard lesson about the way Nextcloud manages files.

Basically, it has a file cache where it will save mtime and storage_mtime. This is the "Modified Time" of the file. As mentioned above, this may roughly be the time it was created, or the last time it was modified.

This file cache seems to be maintained by Nextcloud internally as files are uploaded, or when you do the occ files:scan command.

The problem with this is that if you change the back-end storage, the cache is not updated. And if it is updated, it may be updated from the new back-end storage, which may not have the correct modified dates.

I even tried updating the modified dates directly in the database after syncing the files, and Nextcloud simply didn't want to accept the new modified times.

There are some sparse comments suggesting that Nextcloud has a way to update the modified time of files directly through an API, but there is no tool for doing this. Their crooked view of how a desktop sync client works is also working against us. In the developers' minds, no one would ever use Nextcloud as a Dropbox replacement where their local hard drive is really sort of the "master". In their view, if you uncheck a file from being synced, it means you want to delete it from your local drive. It also means they provide no way to push the metadata from our local drive to the existing files on the Nextcloud server.

To put it simply: If you want to migrate your Nextcloud files to another server, you may as well do a full re-upload of all your files. DO NOT expect to be able to move your files on the server side and keep the modified dates.

Aside from this, their WebDAV implementation is quite broken: lots of File Locking issues, cache size issues, and sync speed issues.

I still like and use Nextcloud, but they are rapidly falling behind by focusing on silly things like "Nextcloud Hub" while some of their core functionality is falling apart.

1 - S3 Storage Attempt

To do this, I utilized the "External Storage" feature of Nextcloud and mounted an S3 bucket at the root folder "/". This was really easy to drop in.

At this time, I was still behind the firewall in China, so uploading all of my files fresh was not reasonable. I still had my data on my Kimsufi server and decided to copy from that server to S3.

Syncing with rclone

I found the amazing tool called rclone. It works a bit like rsync, but it has many more awesome features. It even has a web-based interface for browsing your different file storages. It seems like it can support every online storage service out there (including Wasabi). It is highly configurable and fast. I was able to very quickly push all of my data from Kimsufi to S3. I really cannot say enough good things about this tool.

I had two issues which combined to form an ugly beast:

First - an old bug. There was an old bug in rclone that caused paths with spaces to only parse the first 1,000 files. This meant that for folders with lots of files (like time-lapse folders), rclone would always think it needed to sync the remaining files whenever the sync was run again. Combined with S3 storage, this meant that each time I restarted the sync, it would re-write THE SAME FILES to the S3 storage. Given the hidden costs mentioned above, I would have to pay for the same files multiple times, for 3 months each. This was not rclone's fault, because they had already fixed the bug, but the distro I was using had quite outdated repositories.

Second - file system cache. Similar to other issues I would eventually face, there were caching issues on the remote server. The remote Nextcloud server only had 40 GB of local storage space, and the External Storage with S3 is where everything should end up. rclone is such a powerful tool that it can run multiple threads at once to make your files copy faster. This has the unfortunate side-effect of requiring a lot of extra cache space. If it's going to try to copy 8x 8 GB files at once, then Nextcloud on the target machine will need to reserve 64 GB of disk space for all the incoming files. If your disk doesn't have enough space for this (like mine), the files will fail to copy. Then you'll have to re-run the sync to get the rest, and in my case this also meant re-copying many files because of the "first-thousand" bug. Even after I fixed that bug, I still got stuck, because it kept trying the same set of files over and over, which would fill up the cache each time.

The workaround is to manually select which folders to copy, or to reduce the number of threads, or to have more cache space available. The developer for this tool is super responsive, and has commented that he is also considering ways to improve the tool so that it will "blend" between large and small files to optimize cache usage.
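The cache arithmetic above (8 parallel 8 GB files need 64 GB of staging space) can be turned into a quick way of picking a thread count. rclone's `--transfers` flag sets how many files it copies in parallel; the sketch below assumes, as described above, that every in-flight file is staged in full on the target's disk, and finds the largest parallel count whose worst case still fits. The function names are hypothetical.

```python
from typing import List

GB = 1000**3


def worst_case_staging(file_sizes: List[int], transfers: int) -> int:
    """Bytes needed if the `transfers` largest files are all in flight at once."""
    return sum(sorted(file_sizes, reverse=True)[:transfers])


def max_safe_transfers(file_sizes: List[int], free_bytes: int, upper: int = 16) -> int:
    """Largest parallel count (up to `upper`) whose worst case fits in free_bytes.

    Returns 0 if even the single largest file does not fit.
    """
    best = 0
    for n in range(1, upper + 1):
        if worst_case_staging(file_sizes, n) > free_bytes:
            break
        best = n
    return best


if __name__ == "__main__":
    eight_big_files = [8 * GB] * 8
    print(worst_case_staging(eight_big_files, 8) // GB)  # 64
    print(max_safe_transfers(eight_big_files, 40 * GB))  # 5
```

With eight 8 GB files and 40 GB free, this suggests 5 parallel transfers; a result of 0 means even the largest single file will not fit, and no thread count will help.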

It seems that a lot of this is just how S3 works. S3 uses the "modified" time for its own purposes, such as knowing which files have been overwritten and can be removed, so there is no standard way to update the modified time of the files in an S3 bucket. I think this is quite silly. It means that whatever is accessing the files in S3 has to maintain its own "modified" date. While S3 is a really cool concept, it's just not meant for this purpose (yet?).

Road block

I thought the hard part was over: copying 900 GB of files. Little did I know, that was the EASY part.

After syncing the 900 GB, I noticed that all of the modified times were wrong. It was showing TODAY, the day I copied the files. This is catastrophic! I had to avoid linking up my desktop client, because Nextcloud would assume that the server has the newer files, and would then force me to re-download all of the files and overwrite the correct files and dates on my local disk! There is no option in the client to get around this, or to push the local dates back to Nextcloud.

This is when I tried to do some low level updates to the modified time on Nextcloud, but no methods seemed to work. So ultimately, even though I could get the data to my Nextcloud server on the remote side, no matter which way I copied it, it would always overwrite the "modified" date to the date it was copied to the new location.

There are some utilities that can mount S3 directly, they basically act as a gateway. This may get around the issue of S3 modified dates, but I'm not sure. Also, many of them seem to be a bit complex to set up and are still under heavy development. I didn't want to have to learn (and test) yet another thing just to migrate my Nextcloud.

Finally, we have to consider the hidden costs of using S3. At this point, my 900 GB of data was being billed as 1.8 TB of space due to the repeated file syncs. I would need to pay for this 1.8 TB mistake for a minimum of 3 months; not to mention that all of the modified dates were wrong. This was one of the cases where it was easier to just burn it all down and start again.

2 - External Storage with WebDAV

By this time, I decided to run away from Wasabi because of all the hidden fees and issues. I started looking for a simple storage service without them. That's when I came across, and decided on, Hetzner Storage Box.

Why? Because it's 2 TB for a fixed price, no hidden costs, and I can cancel at any time without needing to pay an additional 3 months of data storage costs.

The Storage Box supports a number of different access methods, so I decided to try WebDAV mounted to Nextcloud as External Storage.

I ran into some of the same sync issues (due to cache + rclone bug) so I eventually just synced directly from the old server to the Storage Box using rclone. Maybe if I continued to use Nextcloud as the "WebDAV gateway" it could have kept the modified dates, but I'm not sure. I don't remember the exact order I did things in. All I know is that at the end of this, I had a new Nextcloud with 900 GB of files with the wrong modified dates and no matter what I did nothing would fix them.

The other disadvantage with External Storage in Nextcloud is that it seems if you change the mount type or mount point, it drops all of the file cache data it had. So when I tried to change from WebDAV to CIFS, it had to rebuild all of the cache and then it definitely lost any of the modified dates it had.

In general, this is an issue with Nextcloud's External Storage. If this is going to change at all, be prepared to lose all of your metadata!!!

Perhaps this could be fixed if Nextcloud provided a tool to sync only the metadata, but they do not.

3 - Mount Storage Box as DAVfs

This approach basically works around the External Storage features in Nextcloud. Instead of letting Nextcloud manage the External Storage poorly, we let our OS mount the external storage to a specific point, and tell Nextcloud to store its data there. There are a few ways to do this, and they're not as scary as they seem.

I tried this approach but I encountered the same issue as with rclone: cache space. As Nextcloud receives files through WebDAV it wants to cache them, and then it tries to pass them to the DAVfs which also wants to cache them. This results in a DOUBLE cache of any files being uploaded which is basically a headshot to our already limited disk space.

Since my server and the storage box are on the same network I thought it would be safe enough to disable the cache, but it seems that DAVfs does not support this. There are some relatively low-level changes required to get this to work, and I didn't want to fight with it.

But, we are getting closer...

4 - Mount Storage Box as CIFS

As far as Nextcloud is concerned, this is the same as us mounting as WebDAV - it just thinks it is reading and writing files from the disk.

At first, my CIFS speed was around 25 MB/s while the WebDAV was around 150 MB/s from the StorageBox. That's why I chose WebDAV first. After poking around, it seems this was not a technical limitation of CIFS, but rather a configuration issue. I never figured it out fully, but it's related to the caching mechanism it uses.
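To put the speed gap in perspective, here is the back-of-the-envelope arithmetic for moving 900 GB at each rate, as a small Python helper. It assumes decimal units (1 GB = 1000 MB) and a perfectly sustained speed with no protocol overhead, so real transfers will take longer.

```python
def transfer_hours(size_gb: float, mb_per_s: float) -> float:
    """Hours to move size_gb at a sustained mb_per_s (decimal units, no overhead)."""
    return size_gb * 1000 / mb_per_s / 3600


if __name__ == "__main__":
    for label, speed in (("CIFS, untuned", 25), ("WebDAV", 150)):
        print(f"{label}: {transfer_hours(900, speed):.1f} hours for 900 GB")
```

That works out to about 10 hours at 25 MB/s versus under 2 hours at 150 MB/s, a big difference when you expect to repeat the copy more than once.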

One thing about mounting as CIFS is that you need to specify the account it will be mounted under. This may be an issue for other applications that want to mount for specific users, but it doesn't matter for Nextcloud, because Nextcloud expects the storage directory to be owned by the web server account it is running under. In the end, my mount command and fstab entry look like this:

Mount command:

mount -t cifs -o seal,cache=loose,uid=48,forceuid,gid=48,file_mode=0755,dir_mode=0770,forcegid,credentials=/home/box.txt //account.your-storagebox.de/backup /mnt/storage

fstab entry (/etc/fstab):

//account.your-storagebox.de/backup /mnt/storage cifs iocharset=utf8,rw,seal,cache=loose,uid=48,forceuid,gid=48,file_mode=0755,dir_mode=0770,forcegid,credentials=/home/box.txt 0 0

I need to clean up the default permissions they are being mounted as, but this is working really well so far.
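The `credentials=/home/box.txt` option points mount.cifs at a file holding the login, with one `username=` line and one `password=` line. Since that file contains a plain-text password, it should be readable only by its owner. Here is a minimal Python sketch for writing it with mode 0600; the helper name and the example values are hypothetical.

```python
import os


def write_cifs_credentials(path: str, username: str, password: str) -> None:
    """Write a mount.cifs credentials file that only its owner can read (mode 0600)."""
    # Create the file with 0600 from the start so the password is never world-readable.
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_TRUNC, 0o600)
    with os.fdopen(fd, "w") as f:
        f.write(f"username={username}\n")
        f.write(f"password={password}\n")
    # The mode passed to os.open is ignored if the file already existed, so enforce it.
    os.chmod(path, 0o600)
```

For example, running `write_cifs_credentials("/home/box.txt", "your-storagebox-user", "your-password")` as root would produce a file in the shape the mount options above expect.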

With this configuration, it doesn't eat up as much cache space as DAVfs, and it manages to save all of the metadata for the files.

When I uploaded all my files to Nextcloud, I could see that the modified dates on the files were accurate.

End State

By the time I reached the end, I uploaded my data 3 or 4 times. So, really, it would have been easier to just start up a new Nextcloud and upload it fresh using the client.

I ended up using the Storage Box mounted with CIFS and pointing the Nextcloud data directory to it. Once I got outside of China I was able to upload all of my files in a couple days with a fast internet connection.

My costs are ~$5/month for my server (which hosts more than just Nextcloud) and $12/month for 2 TB of data. The benefit of this over Kimsufi is that I'm not gambling with my data being stored on only one drive; the Storage Box has much more redundancy and security measures in place. Also, I can easily move to another storage provider without affecting my primary server.

Other Thoughts

Kimsufi

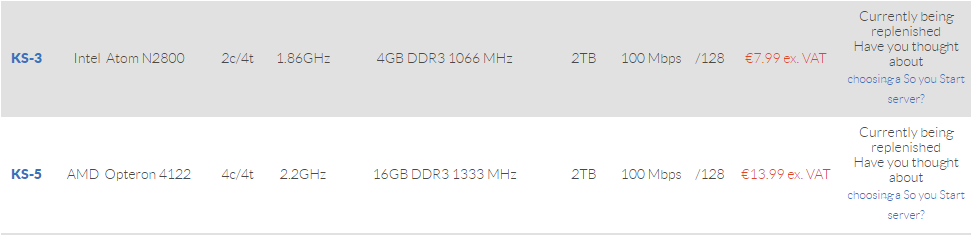

At the time of writing, all the decently priced Kimsufi servers are not available:

And while these prices are tempting, you just have to remember that when renewal time comes around, and all the competitors have dropped their prices, Kimsufi will force you to migrate your own stuff to a new server. Not to mention that there is no data redundancy here!

If I was going for a truly "low cost", and Kimsufi had available servers, it may be worthwhile. But I would still make sure to keep another copy on my own hard drive somewhere.

S3

S3 storage for $5 or $6/TB is not a bad deal at all. If it could work reliably, it would be roughly the same as the Storage Box option. If you're storing less than 1 TB of data, even though Wasabi would charge you for the full TB, it would still be less than the 1 TB Storage Box from Hetzner, currently priced at 9.40 Euros. Beyond 1 TB, Hetzner becomes cheaper per TB than S3, also allows scaling, and avoids all of the hidden fees. You can cancel at any time and not pay more.

No matter what, we still have to remember that S3 and Nextcloud External Storage don't seem to play well together.

Nextcloud Metadata Issues

They really need to get their act together. It's sad that they don't consider cases where the user's local hard drive is the "master", or provide a way to update only the metadata.

If someone were to write a simple tool to sync metadata from a local disk up to Nextcloud it would fix nearly all of these issues!
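As a starting point for such a tool, here is a Python sketch of the comparison half: it collects modified times from a local folder and reports files whose times differ from a server-side listing. How the server listing is obtained (for example, the `getlastmodified` property from a WebDAV PROPFIND) is left out, as is pushing corrected times back to Nextcloud, which is exactly the part Nextcloud offers no tool for. All names here are hypothetical.

```python
import os
from typing import Dict, Tuple


def local_mtimes(root: str) -> Dict[str, int]:
    """Map each file's path relative to root ('/'-separated) to its mtime in whole seconds."""
    result: Dict[str, int] = {}
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            full = os.path.join(dirpath, name)
            rel = os.path.relpath(full, root).replace(os.sep, "/")
            result[rel] = int(os.stat(full).st_mtime)
    return result


def mtime_mismatches(local: Dict[str, int], server: Dict[str, int],
                     tolerance_s: int = 1) -> Dict[str, Tuple[int, int]]:
    """Files present on both sides whose modified times differ by more than tolerance_s.

    Values are (local_mtime, server_mtime); files on only one side are ignored.
    """
    return {
        path: (local[path], server[path])
        for path in local.keys() & server.keys()
        if abs(local[path] - server[path]) > tolerance_s
    }
```

The one-second tolerance allows for a server that stores modified times at whole-second precision; after a botched migration like mine, nearly every file would show up in the mismatch list.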

If I did it again...

It would all depend on the current market prices of data storage. It will get cheaper over time, and I imagine there will always be those who try to push S3 and those who try to push regular storage like the StorageBox. I guess they will always be roughly comparable in price, because they are both providing redundancy and are both using the same hard drives in their servers.

Since I did end up mounting my own external storage at the OS level (which I didn't want to do), if the S3 prices were comparable I might try mounting S3 directly at the OS level. However, we always need to remember the hidden costs. Even if the cost per TB is the same between S3 and something like a Storage Box, S3 is likely to charge a retention fee even after you cancel. This isn't likely to affect you in the short term, but if you ever want to cancel, you can expect to pay an extra fee. Part of me thinks they do this intentionally to encourage you to never cancel their services... :)
